Feeling Fast, Delivering Slow: Why Your AI Adoption Still Hasn't Paid Off

Removing Impediments: The Unglamorous Path to AI-Assisted Development

Everyone wants to talk about AI productivity gains. Few want to talk about why most teams aren’t seeing them.

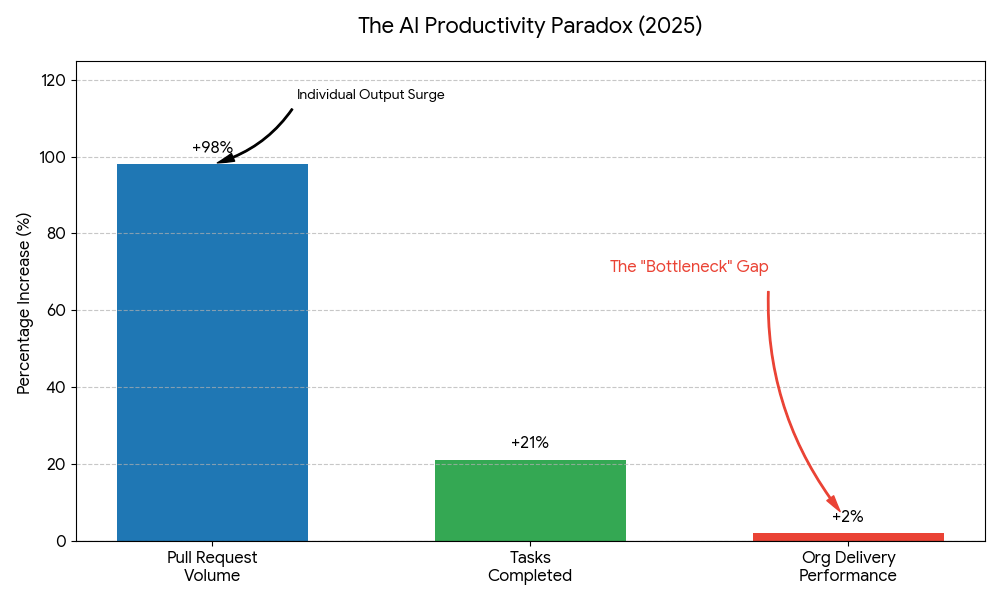

The problem isn’t the technology. Research from Faros AI analyzing over 10,000 developers found that while developers using AI complete 21% more tasks and merge 98% more pull requests (PRs), companies see no measurable performance gains at the organizational level. The 2025 DORA report describes this clearly: AI is both a mirror and a multiplier, amplifying efficiency in cohesive organizations while exposing weaknesses in fragmented ones.

The challenge of successful AI adoption isn’t a tools problem; it’s a Value Stream Management problem. We’re bolting AI onto development practices that weren’t ready for it. Generating ten times the code doesn’t help if your foundation can’t absorb the load.

Understanding Where You Are: The Seven Team Archetypes

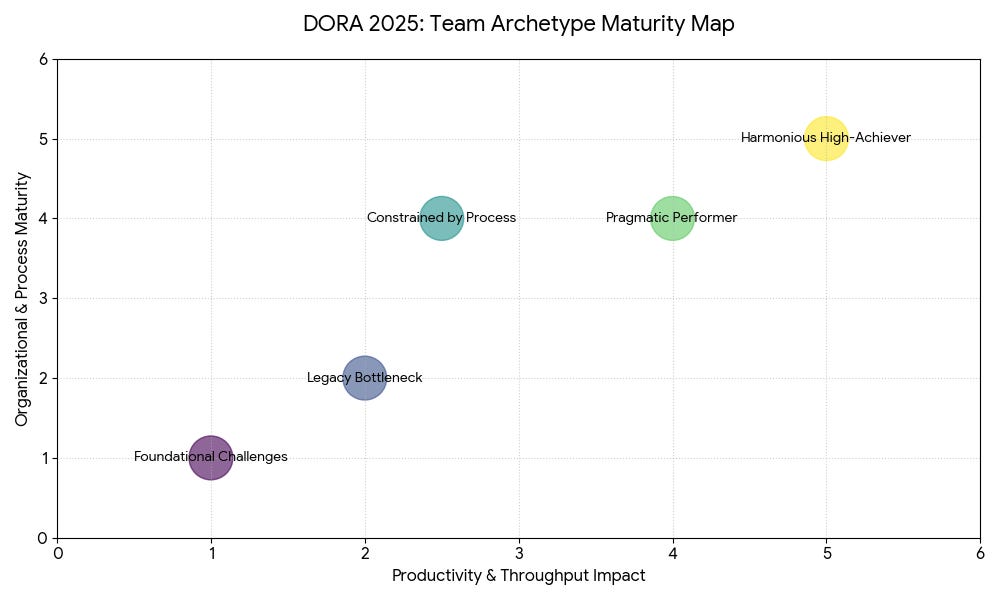

Before you can fix your impediments, you need to understand which ones are holding you back. The 2025 DORA report introduced seven distinct team archetypes that reveal how organizational health, team structure, and platform maturity influence performance outcomes:

Foundational Challenges - Teams in survival mode with significant process gaps

Legacy Bottleneck - Constantly reacting to unstable systems where AI makes individuals faster but outdated deployment systems and messy integrations eat up all those gains

Constrained by Process - Consumed by inefficient workflows

High Impact, Low Cadence - Producing quality work slowly

Stable and Methodical - Delivering deliberately with high quality

Pragmatic Performers - Impressive speed with functional environments

Harmonious High-Achievers - A virtuous cycle of sustainable excellence

Here’s the critical insight: each archetype experiences AI adoption differently and requires tailored intervention strategies. Teams with legacy bottlenecks may need architecture modernization before AI expansion. Teams suffering burnout need friction reduction and workload rebalancing. Without accurate team-level measurement mapped to these archetypes, enterprises misallocate AI investment—they might invest heavily in AI coding assistants for “Legacy Bottleneck” teams whose actual constraint is deployment pipeline fragility.

The speed-versus-stability trade-off is a myth. The best-performing teams achieve both high throughput and high stability simultaneously, while struggling teams often fail at both dimensions.

The Perception Gap: Feeling Fast vs. Being Fast

Here’s an uncomfortable truth: developers often feel dramatically more productive with AI while organizational metrics tell a different story. METR’s July 2025 study provides the most striking evidence of this perception gap.

When experienced developers with an average of 5 years on their projects used AI tools, they expected a 24% speedup but actually took 19% longer to complete tasks. Let that sink in: the perceived acceleration masked a real deceleration. Why? The researchers found that AI-generated code requires fundamentally different scrutiny: it’s literalist, sometimes subtly off, and demands careful verification that takes time.
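To put numbers on that gap, here is the arithmetic, assuming “24% speedup” means developers expected to spend 24% less time per task and “19% longer” means they spent 19% more. The 60-minute baseline is hypothetical; the ratio at the end does not depend on it.

```python
# Perception-gap arithmetic. BASELINE_MINUTES is a hypothetical task length;
# only the 24% and 19% figures come from the study as reported above.
BASELINE_MINUTES = 60.0
EXPECTED_SPEEDUP = 0.24   # forecast: 24% less time with AI
OBSERVED_SLOWDOWN = 0.19  # measured: 19% more time with AI

expected_minutes = BASELINE_MINUTES * (1 - EXPECTED_SPEEDUP)
actual_minutes = BASELINE_MINUTES * (1 + OBSERVED_SLOWDOWN)

# How far reality landed from expectation, relative to the expectation.
gap_ratio = actual_minutes / expected_minutes

print(f"expected: {expected_minutes:.1f} min")   # expected: 45.6 min
print(f"actual:   {actual_minutes:.1f} min")     # actual:   71.4 min
print(f"actual/expected: {gap_ratio:.2f}x")      # actual/expected: 1.57x
```

Under those assumptions, a task developers expected to finish in about 46 minutes took about 71, roughly 1.57 times their own estimate.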

This perception paradox is dangerous because it feels like progress while hiding downstream problems. Developers report coding 3-5x faster with AI assistants, yet sprint velocities remain flat. The issue isn’t that AI doesn’t help—it’s that local productivity gains don’t automatically translate into organizational value delivery.

This is precisely why strengthening the fundamentals matters. When you feel fast but deliver slow, the problem isn’t effort—it’s impediments in your value stream eating the gains.

Strengthen Your Testing Guardrails First

AI will generate more code faster than your team ever has. That’s the promise and the problem. When Salesforce’s engineering team boosted code output by 30% with AI-assisted tooling, it created significant pressure on test coverage, validation workflows, and code review throughput.

Without robust testing infrastructure, you’re just accelerating technical debt. Analysis from Greptile in late 2025 showed lines of code per developer rose 76% overall from March to November, with pull request sizes increasing 33%. DX’s 2025 research notes that organizations treating AI code generation as a process challenge rather than a technology challenge achieve 3x better adoption rates.
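One cheap guardrail against ever-larger PRs is a size check in CI. This sketch assumes the changed-line counts come from `git diff --numstat` (a real git option that prints added and deleted line counts per file, with `-` for binary files); the 400-line limit and the function names are hypothetical, chosen only for illustration.

```python
# Minimal PR-size check over `git diff --numstat <base>...HEAD` output.
# Each line looks like "<added>\t<deleted>\t<path>"; binary files report "-".
# MAX_CHANGED_LINES is a hypothetical team policy, not an industry standard.
MAX_CHANGED_LINES = 400


def changed_lines(numstat_output: str) -> int:
    """Sum added + deleted lines across files, skipping binary files."""
    total = 0
    for line in numstat_output.splitlines():
        if not line.strip():
            continue
        added, deleted, _path = line.split("\t", 2)
        if added == "-" or deleted == "-":
            continue  # binary file: git reports no line counts
        total += int(added) + int(deleted)
    return total


def check_pr_size(numstat_output: str, limit: int = MAX_CHANGED_LINES) -> tuple[bool, int]:
    """Return (within_limit, changed_line_count) for the diff."""
    count = changed_lines(numstat_output)
    return count <= limit, count


if __name__ == "__main__":
    sample = "120\t30\tsrc/orders.py\n-\t-\tdocs/diagram.png\n300\t10\tsrc/billing.py\n"
    ok, count = check_pr_size(sample)
    print(ok, count)  # False 460
```

Failing the build is one option; posting a warning that asks the author to split the change is a gentler one. Either way, PR growth becomes visible before it lands on a reviewer.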

Before you scale up AI adoption, you need:

Comprehensive unit and integration test coverage that catches regressions, not just exercises happy paths

Clear testing standards your team actually follows

Automated test suites that run fast enough to not be ignored

Property-based testing for edge cases AI commonly misses
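Property-based testing states invariants that must hold for any input and checks them against many generated inputs. In Python this is usually done with the Hypothesis library, which also shrinks failing cases to minimal examples; the sketch below uses only the standard `random` module so it runs anywhere, and it has no shrinking. `dedupe_preserving_order` is a hypothetical stand-in for an AI-generated helper.

```python
import random


def dedupe_preserving_order(items: list[int]) -> list[int]:
    """Hypothetical AI-generated helper under test: drop repeats, keep first occurrences."""
    seen: set[int] = set()
    result: list[int] = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


def check_properties(trials: int = 500, seed: int = 0) -> None:
    """Generate random inputs and assert the invariants hold for every one."""
    rng = random.Random(seed)
    for _ in range(trials):
        # A small value range forces many duplicates; length 0 covers the empty edge case.
        items = [rng.randint(-5, 5) for _ in range(rng.randint(0, 30))]
        out = dedupe_preserving_order(items)
        assert len(out) == len(set(out)), f"duplicates remain for {items}"
        assert set(out) == set(items), f"elements lost or invented for {items}"
        # Order: output must equal the first occurrence of each value, in input order.
        first_positions = sorted({x: items.index(x) for x in set(items)}.values())
        assert out == [items[i] for i in first_positions], f"order broken for {items}"
        # Idempotence: deduplicating twice changes nothing.
        assert dedupe_preserving_order(out) == out


if __name__ == "__main__":
    check_properties()
    print("all properties held")
```

The properties say nothing about how the helper works, which is the point: they hold whether a human or an AI wrote the implementation, and they probe inputs (empty lists, heavy repetition, negative numbers) that a happy-path example test would skip.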

Qodo’s 2025 State of AI Code Quality report found that 25% of developers estimate that 1 in 5 AI-generated suggestions contain factual or functional errors, with hallucinations remaining a key factor behind low confidence and review friction. AI-generated code looks plausible. It often runs. Whether it’s correct for your specific context is another question entirely. Your tests are the only scalable way to answer that question.

Know Your Architecture and Dependencies: The Context Problem

AI assistants don’t understand your codebase’s history, the subtle dependencies between modules, or why that seemingly weird pattern exists. Your developers need to. When code generation accelerates, architectural mistakes compound faster.

Here’s the data that should wake everyone up: according to Qodo’s 2025 research, 44% of developers who say AI degrades code quality blame context issues. This isn’t a minor problem; it’s the most frequently cited cause of AI errors. When developers report context pain, they’re describing AI tools that miss critical relationships between modules, ignore architectural patterns, and generate code that looks correct in isolation but breaks the system.

The context gap is pervasive: 65% of developers say AI tools miss critical context during their most important tasks, and developers still spend 60-70% of their time just understanding code. Without context, AI doesn’t just break things—it breaks them in subtle, cascading ways that only surface weeks later.

Teams seeing success with AI have invested in:

Clear, accessible documentation of system architecture that serves as context for both AI and developers

Dependency mapping that shows what affects what across services

Explicit design patterns and when to use them

Shared understanding of the product domain

Context engineering—securely connecting AI tools to internal documentation and codebases
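A dependency map is most useful when it can answer “if I change X, what else might break?” Here is a minimal sketch of that impact query, assuming the map is kept as a simple service-to-dependencies dictionary; the service names are hypothetical.

```python
from collections import deque

# Hypothetical dependency map: each service lists the services it calls.
DEPENDS_ON: dict[str, set[str]] = {
    "web-frontend": {"orders-api", "auth"},
    "orders-api": {"inventory", "payments", "auth"},
    "payments": {"ledger"},
    "inventory": set(),
    "auth": set(),
    "ledger": set(),
    "reporting": {"ledger"},
}


def impacted_by(changed: str, depends_on: dict[str, set[str]]) -> set[str]:
    """Return every service that directly or transitively depends on `changed`."""
    # Invert the edges: for each service, record who depends on it.
    dependents: dict[str, set[str]] = {name: set() for name in depends_on}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    # Breadth-first search over the inverted graph; `impacted` doubles as the visited set.
    impacted: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, set()):
            if parent not in impacted:
                impacted.add(parent)
                queue.append(parent)
    return impacted


if __name__ == "__main__":
    print(sorted(impacted_by("ledger", DEPENDS_ON)))
    # ['orders-api', 'payments', 'reporting', 'web-frontend']
```

Changing `ledger` surfaces `payments` and `reporting` directly, plus `orders-api` and `web-frontend` transitively. The same answer can be handed to a developer or an AI assistant as context before the change is made.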

According to Questera’s 2025 best practices guide, the most effective developers use context-first thinking—providing AI with complete module context rather than just asking for code. If your team doesn’t deeply understand the codebase they’re modifying, AI becomes a force multiplier for confusion.
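Context-first prompting can start with something as simple as listing what a module depends on before asking for changes to it. This sketch uses Python’s standard `ast` module to extract imports; the `build_context` bundle format and its section headings are hypothetical, not the input format of any particular AI tool.

```python
import ast


def imported_modules(source: str) -> list[str]:
    """Return the sorted, de-duplicated modules a Python source file imports."""
    modules: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom):
            # Relative imports keep their leading dots; node.module is None for "from . import x".
            modules.add("." * node.level + (node.module or ""))
    return sorted(modules)


def build_context(path: str, source: str, architecture_notes: str) -> str:
    """Assemble a hypothetical context bundle: design notes, dependencies, then the code."""
    deps = ", ".join(imported_modules(source)) or "(none)"
    return (
        f"# Architecture notes\n{architecture_notes}\n\n"
        f"# Module: {path}\n"
        f"Imports: {deps}\n\n"
        f"# Source\n{source}"
    )


if __name__ == "__main__":
    sample = (
        "import os\n"
        "from .models import Order\n"
        "from billing.tax import rate\n\n"
        "def total(order):\n"
        "    return order.amount * (1 + rate(order))\n"
    )
    print(imported_modules(sample))  # ['.models', 'billing.tax', 'os']
    print(build_context("orders/service.py", sample, "Orders owns pricing; billing owns tax."))
```

The design choice is ordering: the assistant sees the architectural notes and the module’s dependencies before the code, rather than a bare request for code.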

Improve Your Planning Process

Vague requirements were always a problem. With AI, they become expensive. AI will happily generate elegant solutions to the wrong problem, and it will do so very quickly. The faster you can produce code, the more important it becomes to produce the right code.

An MIT technology report on 2025 developer survey data found that 65% of developers now use AI tools at least weekly. According to DX’s enterprise adoption research, the most valuable applications in order of perceived time savings are: stack trace analysis, refactoring existing code, mid-loop code generation, test case generation, and learning new techniques.

Better planning means:

More time spent clarifying requirements before coding starts

Breaking work into smaller, well-defined pieces that AI can handle reliably

Clear acceptance criteria that both humans and AI can work against

Quick feedback loops to catch misunderstandings early
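One way to make acceptance criteria usable by both humans and AI is to write them as executable tests before the implementation exists. The shipping rules below (free at $100 and above, a flat $7.50 otherwise, negative totals rejected) are hypothetical, as is `shipping_cost`; the test functions follow pytest naming conventions but also run on their own.

```python
from decimal import Decimal

FREE_SHIPPING_THRESHOLD = Decimal("100.00")  # hypothetical business rule
FLAT_RATE = Decimal("7.50")                  # hypothetical business rule


def shipping_cost(order_total: Decimal) -> Decimal:
    """Implementation written (by a human or an AI) against the criteria below."""
    if order_total < 0:
        raise ValueError("order total cannot be negative")
    return Decimal("0.00") if order_total >= FREE_SHIPPING_THRESHOLD else FLAT_RATE


# Acceptance criteria, stated once and checked mechanically.
def test_orders_at_threshold_ship_free() -> None:
    assert shipping_cost(Decimal("100.00")) == Decimal("0.00")


def test_orders_below_threshold_pay_flat_rate() -> None:
    assert shipping_cost(Decimal("99.99")) == FLAT_RATE


def test_negative_totals_are_rejected() -> None:
    try:
        shipping_cost(Decimal("-1.00"))
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative total")


if __name__ == "__main__":
    for check in (test_orders_at_threshold_ship_free,
                  test_orders_below_threshold_pay_flat_rate,
                  test_negative_totals_are_rejected):
        check()
    print("all acceptance criteria pass")
```

Boundary cases such as exactly $100.00 are where vague requirements usually hide; writing them down as tests forces the question to be answered before any code is generated.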

AI doesn’t fix ambiguity. It scales it. A 2025 DEV Community article identified one of the biggest mistakes as generating large chunks of code and hoping they’ll “just work,” which usually creates hidden errors, broken dependencies, or missed edge cases.

Invest in Code Review Tools and Time: The Security Review Bottleneck

This is the bottleneck nobody anticipated, and it’s creating a security crisis. Faros AI’s research found PR review time increases 91% with AI adoption, revealing a critical bottleneck: human approval. AsyncSquadLabs’ November 2025 analysis confirms that while AI has dramatically accelerated the coding phase, code review remains stubbornly human-paced. Teams are now writing code faster than they can review it, creating a security review bottleneck where vulnerabilities and architectural mistakes can slip through under deadline pressure.

Human-written code comes with implicit context. You can often infer intent from structure. AI-generated code is literalist, sometimes subtly off, and requires different scrutiny. Understanding AI-generated code is fundamentally different from reviewing code you wrote yourself—when you write code, you’ve already reasoned through the logic, but with AI-generated code, you’re reverse-engineering someone else’s solution.

SmartDev’s October 2025 analysis found that manual review dependencies are widely recognized as a significant source of development delays, with teams waiting four days or more for code reviews while deadlines loom. As AI generates more code, the bottleneck shifts from writing code to understanding it, testing it locally, and getting it through peer review.
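The arithmetic behind a review bottleneck is simple queueing: if PRs arrive faster than reviewers can clear them, the backlog grows every day. This toy model is deterministic and ignores PR size and reviewer variability; all rates and function names are hypothetical.

```python
def review_backlog(days: int, opened_per_day: float, reviewed_per_day: float,
                   starting_backlog: float = 0.0) -> list[float]:
    """Return the number of unreviewed PRs left at the end of each day."""
    backlog = starting_backlog
    history: list[float] = []
    for _ in range(days):
        backlog += opened_per_day                   # new PRs join the queue
        backlog -= min(backlog, reviewed_per_day)   # reviewers clear what they can
        history.append(backlog)
    return history


def queue_wait_days(backlog: float, reviewed_per_day: float) -> float:
    """Rough days a newly opened PR waits behind the existing queue (first in, first out)."""
    return backlog / reviewed_per_day


if __name__ == "__main__":
    print(review_backlog(5, opened_per_day=10, reviewed_per_day=10))  # [0.0, 0.0, 0.0, 0.0, 0.0]
    print(review_backlog(5, opened_per_day=15, reviewed_per_day=10))  # [5.0, 10.0, 15.0, 20.0, 25.0]
    print(queue_wait_days(25.0, reviewed_per_day=10))                # 2.5
```

With 10 PRs opened and 10 reviewed per day, the queue stays empty. Raise arrivals to 15 while review capacity stays at 10 and the backlog grows by 5 every day; after a working week a new PR sits behind roughly 2.5 days of queued reviews. Faster authoring without more review capacity only moves the wait.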

This means:

Better code review tooling to handle increased volume

More reviewer time - not the same amount, MORE

Training on what to look for in AI-generated code specifically

Clear standards for when to accept versus refactor generated code

AI-assisted review tools to pre-screen common issues and security vulnerabilities
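An automated pre-screen can be as modest as pattern checks on the lines a PR adds, so human reviewers start from a list of flagged spots. The rules below are hypothetical and deliberately crude; a real pipeline would lean on a dedicated static-analysis or secret-scanning tool and keep a human in the loop for anything flagged.

```python
import re

# Hypothetical first-pass rules; not a substitute for a real SAST or secret scanner.
RISK_PATTERNS = {
    "dynamic code execution": re.compile(r"\b(eval|exec)\s*\("),
    "shell injection risk": re.compile(r"shell\s*=\s*True"),
    "possible hardcoded secret": re.compile(
        r"(?i)(api[_-]?key|secret|password)\s*=\s*['\"][^'\"]+['\"]"
    ),
    "TLS verification disabled": re.compile(r"verify\s*=\s*False"),
}


def prescreen_diff(diff_text: str) -> list[tuple[int, str, str]]:
    """Flag risky patterns on added lines of a unified diff.

    Returns (line_number_in_diff, rule_name, line_text) tuples for a human reviewer.
    """
    findings = []
    for number, line in enumerate(diff_text.splitlines(), start=1):
        # Only inspect added lines; skip the "+++ b/file" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        added = line[1:]
        for rule, pattern in RISK_PATTERNS.items():
            if pattern.search(added):
                findings.append((number, rule, added.strip()))
    return findings


if __name__ == "__main__":
    diff = "\n".join([
        "--- a/app/client.py",
        "+++ b/app/client.py",
        "@@ -1,3 +1,5 @@",
        " import requests",
        '+API_KEY = "sk-test-123"',
        "+resp = requests.get(url, verify=False)",
        " def fetch():",
        "-    pass",
        "+    return eval(payload)",
    ])
    for finding in prescreen_diff(diff):
        print(finding)
```

Matching only added lines keeps the output focused on what the PR introduces, which is what the reviewer is being asked to approve.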

Qodo’s enterprise research shows that context-aware, test-aware, and standards-aware code review is essential. Multiple evaluations show AI reviewers can catch bugs and provide feedback at machine speed, responding within minutes instead of hours or days. But they work best as a first pass—an engineer-in-the-loop approach that balances automation with continuous human oversight for architectural decisions and security concerns.

Converge on Tools, Not Sprawl

There’s a new AI coding tool every week. Your team doesn’t need to try them all. When asked for the biggest challenges in team AI adoption, the 2025 DORA report found the number one factor mentioned by engineers is the lack of best practices and volatility of tools.

Tool sprawl leads to:

No accumulated expertise with any single tool

Wasted money on overlapping subscriptions

Knowledge fragmentation across the team

Constant context switching that kills productivity

Market analysis from October 2025 shows GitHub Copilot maintains approximately 42% market share among paid AI coding tools, followed by Cursor at 18% and Amazon Q Developer at 11%. A separate August 2025 survey found organizational adoption rates of 43% for Cursor and 37% for Copilot, showing the landscape is evolving rapidly.

But success isn’t about choosing the “best” tool; it’s about picking one and getting really good at it. DigitalOcean’s 2025 comparison guide notes that ~85% of developers use at least one AI tool in their workflow, but the teams that succeed are those that converge rather than constantly switch.

Pick a small set of tools that cover your needs. Let your team get good at them. The developer who has used the same AI assistant for six months, learned its quirks, and refined their prompting will outperform the developer who’s always chasing the newest model. Depth beats breadth.

Create Space for Experimentation Within Boundaries

Things are moving fast. You need room to experiment with new capabilities. But unstructured experimentation doesn’t scale.

The key is bounded exploration:

Dedicate specific time for trying new tools or techniques

Share findings with the team regularly through demos or retrospectives

Converge on practices so individual learnings compound

Research from Booking.com cited in the DORA report found that developer uptake of AI coding tools was initially uneven. When they invested in training developers to give AI assistants more explicit instructions and effective context, they saw up to 30% increases in merge requests and higher job satisfaction.

This is the differentiator between developers seeing consistent results and those constantly battling with AI. The successful ones aren’t trying new things every day. They’re refining their approach through repetition, building intuition about what works, and compounding small improvements over time.

Adoption Through Repetition and Shared Practice

Skills develop through practice, not novelty. The 2025 DORA research emphasizes that engineering excellence is no longer measured solely by speed or frequency, but by the harmony between human cognition, machine augmentation, and organizational design.

A team that converges on shared practices can:

Learn from each other’s experiences in structured ways

Build on previous solutions rather than starting from scratch

Develop shared vocabulary and patterns that accelerate communication

Actually get better over time instead of constantly resetting

Analysis from Leanware in November 2025 found that the most successful teams don’t just use AI tools—they pair them with experienced engineers who validate, refine, and maintain the code AI generates. This engineer-in-the-loop approach balances automation with continuous human oversight.

The team that’s fragmented across different tools, different models, and different approaches? They’re all learning in isolation, unable to benefit from collective refinement. The DORA report confirms that AI tends to deliver “local optimizations”—an engineer codes faster, a test suite runs quicker—but without value stream management, those wins don’t always roll up into business outcomes.

Research shows that positive AI experiences drive greater adoption. This leads to more sophisticated AI interaction skills, which in turn generate even better outcomes—creating a virtuous cycle.

The Path Forward: From Impediments to Impact

The unsexy truth about AI adoption is that it requires strengthening the fundamentals. Better tests. Clearer architecture and context. Stronger planning. More rigorous review with proper security safeguards. Convergent practice.

The 2025 DORA report makes this explicit: AI adoption is no longer the question; how teams transform their value streams around it will determine who actually thrives. The report introduces a new “rework rate” metric alongside the four traditional DORA metrics, giving more visibility into the cost of defects and unplanned fixes that AI can inadvertently accelerate.

First, identify which team archetype you most closely resemble. Are you stuck in a “Legacy Bottleneck” where AI is making individuals faster while deployment systems eat the gains? Are you “Constrained by Process” where AI is creating more paperwork? Or are you on the path to becoming a “Pragmatic Performer” or “Harmonious High-Achiever”?

Then, address the perception gap. Your developers might expect a 24% speedup while actually taking 19% longer. Measure what matters: end-to-end delivery time, rework rate, and organizational throughput, not just individual code generation speed.
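Measuring end-to-end delivery rather than typing speed can start from deployment records. This sketch assumes a hypothetical record with a first-commit time, a deploy time, and a flag for unplanned fixes, and approximates rework rate as the share of deployments that were unplanned fixes for user-facing defects, which is close to how DORA describes it; check the report for the exact definition before comparing against its benchmarks.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    """A hypothetical production deployment record."""
    first_commit_at: datetime  # when work on the change began
    deployed_at: datetime      # when it reached production
    unplanned_fix: bool        # True if it shipped only to fix a user-facing defect


def median_lead_time(deploys: list[Deployment]) -> timedelta:
    """Median first-commit-to-production time: the end-to-end view, not typing speed."""
    return median([d.deployed_at - d.first_commit_at for d in deploys])


def rework_rate(deploys: list[Deployment]) -> float:
    """Share of deployments that were unplanned fixes (an approximation of DORA's rework rate)."""
    if not deploys:
        raise ValueError("no deployments in the measurement window")
    return sum(d.unplanned_fix for d in deploys) / len(deploys)


if __name__ == "__main__":
    start = datetime(2025, 1, 6, 9, 0)
    day = timedelta(days=1)
    deploys = [
        Deployment(start, start + 2 * day, unplanned_fix=False),
        Deployment(start + day, start + 5 * day, unplanned_fix=True),
        Deployment(start + 2 * day, start + 3 * day, unplanned_fix=False),
        Deployment(start, start + 3 * day, unplanned_fix=False),
    ]
    print(median_lead_time(deploys))  # 2 days, 12:00:00
    print(rework_rate(deploys))       # 0.25
```

Tracked over time, these two numbers show whether faster coding is reaching production sooner or just producing more fixes; neither moves when individuals merely feel faster.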

Finally, invest in the fundamentals that turn AI from productivity theater into a genuine organizational advantage:

Testing infrastructure that can handle increased volume

Context engineering that gives AI the architectural awareness it needs

Review processes scaled to handle the security bottleneck

Tool convergence that builds expertise instead of fragmentation

Shared practices that compound learning across the team

Teams with an AI adoption strategy have reported significant development speed increases compared to those without one. But that strategy isn’t about AI—it’s about whether your development process can handle what AI makes possible.

Remove the impediments first. The productivity gains will follow.
